The following was written by a human, and augmented by ChatGPT. Menopausey will always be transparent about when/where generative AI is used in writings. Most of the visuals on the site have been generated by AI tools.

Human:

New experiments and new open-source content!

I (Menopausey’s Founder, Becca Williams) have been exploring generative AI in various capacities for the last year or so. As an artist and a technologist, I am sitting right in the middle of the terrified, hopeful, and excited camps. This stuff will augment human intelligence in so many incredible ways, so let’s be intentional and responsible at every step.

I also periodically explore automation to speed up various things, so this post will highlight a number of tools in the current toolbox.

Finally, on to the new stuff:

- I’ve open-sourced some of my research to highlight perimenopause-related resources, apps, and companies on this page

- I’m sending perimenopause- and menopause-related headlines to this page every couple of hours (you’ll see the latest 10 headlines for each topic)

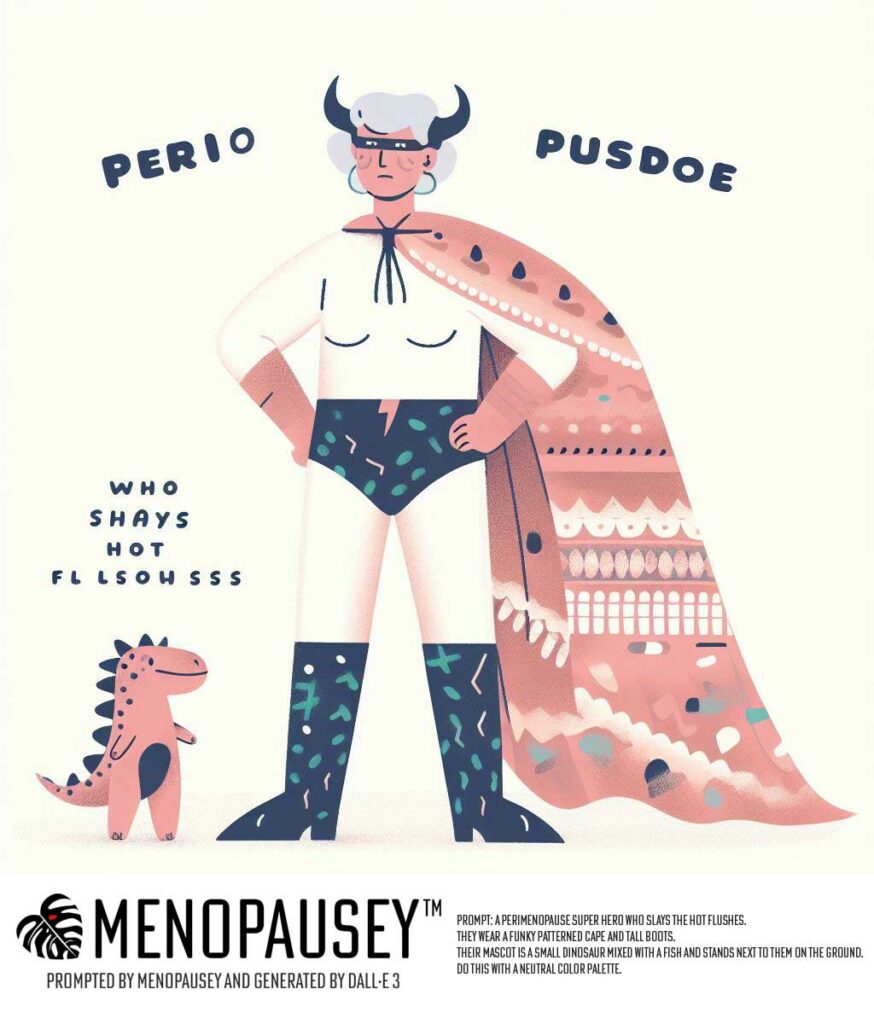

- Some funny/not funny content experiments with DALL·E 3

  - My prompt: A perimenopause super hero who slays the hot flushes. They wear a funky patterned cape and tall boots. Their mascot is a small dinosaur mixed with a fish and stands next to them on the ground. Do this with a neutral color palette.

  - Devil’s horns on the first image? Really?!?!?! No #AIBias in that, is there?

No thoughtful use of generative AI is complete without also considering the more dystopian possible futures, so I’d like to highlight the organizations below, which are doing really important work surfacing algorithmic bias.

List generated by ChatGPT

Prompt: which nonprofits are researching algorithmic bias?

| Organization | Summary |
| --- | --- |
| AI Now Institute | Interdisciplinary research center studying the social implications of AI, including issues like algorithmic bias. |
| Algorithmic Justice League (AJL) | Organization raising public awareness about the social impacts of AI and combating algorithmic bias and injustice. |
| Data & Society | Research institute investigating social and cultural issues related to technology, with a focus on the consequences of data practices and algorithmic bias. |
| Electronic Frontier Foundation (EFF) | Defends civil liberties in the digital world, focusing on issues related to algorithmic transparency and bias. |
| Perception Institute | Uses research to create solutions that address bias and discrimination, including implicit bias and its impact on society. |
| The Center for Democracy & Technology (CDT) | Nonprofit working to protect digital civil liberties, advocating for policies to address algorithmic discrimination. |
| The Markup | Nonprofit newsroom investigating the societal impacts of technology, focusing on how institutions use algorithms. |
| Upturn | Advocates for equity and justice in digital technology, focusing on how systems impact minority and marginalized groups. |